AI Model Deployment: Kubernetes, Docker, and MLOps Strategies

AI model deployment means moving trained models into production in a way that is reliable, scalable, and maintainable. In this guide we walk through modern deployment strategies.

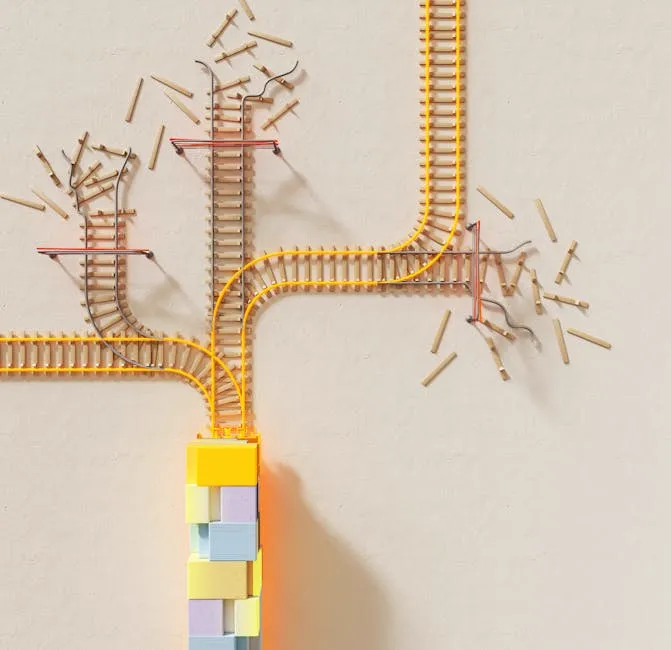

Deployment Patterns

1. Batch Inference

Bulk data processing, run as scheduled jobs:

Data Lake → Batch Job → Model Inference → Results Storage
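The flow above can be sketched as a small batch job. This is a minimal illustration, not a production implementation: `run_batch_job`, `chunked`, and `dummy_predict_batch` are hypothetical names, and the "model" is a stand-in that scores a record by its text length. In a real job the records would come from the data lake and the results would be written to storage.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List


def chunked(records: Iterable, batch_size: int) -> Iterator[list]:
    """Yield consecutive lists of at most batch_size records."""
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def run_batch_job(
    records: Iterable[dict],
    predict_batch: Callable[[List[dict]], List[float]],
    batch_size: int = 256,
) -> List[dict]:
    """Score every record in fixed-size batches and collect the results."""
    results = []
    for batch in chunked(records, batch_size):
        scores = predict_batch(batch)
        for record, score in zip(batch, scores):
            results.append({"id": record["id"], "score": score})
    return results


def dummy_predict_batch(batch: List[dict]) -> List[float]:
    """Hypothetical stand-in for a real model: scores by text length."""
    return [float(len(record["text"])) for record in batch]


rows = [{"id": i, "text": "x" * i} for i in range(5)]
batch_results = run_batch_job(rows, dummy_predict_batch, batch_size=2)
```

Processing in fixed-size batches keeps memory bounded and lets the model amortize per-call overhead across many records.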

2. Real-time Inference

On-demand, API-based predictions:

Request → API Gateway → Model Server → Response

3. Streaming Inference

Continuous processing of a data stream:

Kafka Stream → Stream Processor → Model → Output Stream
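A stream processor of this kind can be sketched with a plain Python generator. This is an assumption-laden illustration: a list stands in for the Kafka topic, and `stream_inference` and `dummy_predict` are hypothetical names. The point it shows is that each event is scored as it arrives and a bad record produces an error event instead of stopping the stream.

```python
from typing import Callable, Iterable, Iterator


def stream_inference(
    events: Iterable[dict],
    predict: Callable[[str], str],
) -> Iterator[dict]:
    """Consume events one at a time and emit one output event per input."""
    for event in events:
        try:
            label = predict(event["text"])
            yield {"key": event["key"], "label": label, "ok": True}
        except Exception as exc:
            # Keep the stream alive when a single record is malformed.
            yield {"key": event.get("key"), "error": str(exc), "ok": False}


def dummy_predict(text: str) -> str:
    """Hypothetical stand-in for a real model."""
    return "long" if len(text) > 5 else "short"


incoming = [
    {"key": "a", "text": "hi"},
    {"key": "b"},  # malformed: no "text" field
    {"key": "c", "text": "hello world"},
]
outgoing = list(stream_inference(incoming, dummy_predict))
```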

4. Edge Deployment

On-device inference:

Mobile/IoT Device → Optimized Model → Local Inference

Model Containerization with Docker

Basic Dockerfile

    FROM python:3.11-slim

    WORKDIR /app

    # System dependencies (curl is needed by the HEALTHCHECK below)
    RUN apt-get update && apt-get install -y --no-install-recommends \
        curl \
        libgomp1 \
        && rm -rf /var/lib/apt/lists/*

    # Python dependencies
    COPY requirements.txt .
    RUN pip install --no-cache-dir -r requirements.txt

    # Model and code
    COPY model/ ./model/
    COPY src/ ./src/

    # Port
    EXPOSE 8000

    # Health check
    HEALTHCHECK \
        CMD curl -f http://localhost:8000/health || exit 1

    # Start command
    CMD ["uvicorn", "src.main:app", "--host", "0.0.0.0", "--port", "8000"]

Multi-stage Build

    # Build stage
    FROM python:3.11 AS builder
    WORKDIR /app
    COPY requirements.txt .
    RUN pip wheel --no-cache-dir --wheel-dir /wheels -r requirements.txt

    # Production stage
    FROM python:3.11-slim
    WORKDIR /app
    # Copy the prebuilt wheels out of the builder stage
    COPY --from=builder /wheels /wheels
    RUN pip install --no-cache-dir /wheels/*
    COPY . .
    CMD ["python", "main.py"]

GPU Support

    # NVIDIA publishes CUDA images with a full patch version in the tag
    FROM nvidia/cuda:12.1.1-runtime-ubuntu22.04

    ENV PYTHONDONTWRITEBYTECODE=1
    ENV PYTHONUNBUFFERED=1

    # Install Python
    RUN apt-get update && apt-get install -y --no-install-recommends \
        python3 python3-pip \
        && rm -rf /var/lib/apt/lists/*

    # PyTorch built against CUDA 12.1
    RUN pip3 install --no-cache-dir torch --index-url https://download.pytorch.org/whl/cu121

    COPY . /app
    WORKDIR /app
    CMD ["python3", "inference.py"]

Model Serving Frameworks

FastAPI Server

    from fastapi import FastAPI, HTTPException
    from pydantic import BaseModel
    import torch

    app = FastAPI()

    # Loaded once at startup and shared by all requests
    model = None

    @app.on_event("startup")
    async def load_model():
        global model
        # model.pt is expected to hold a fully pickled model object, which
        # needs weights_only=False on recent PyTorch. Only load trusted files.
        model = torch.load("model.pt", weights_only=False)
        model.eval()

    class PredictionRequest(BaseModel):
        text: str

    class PredictionResponse(BaseModel):
        prediction: str
        confidence: float

    @app.post("/predict", response_model=PredictionResponse)
    async def predict(request: PredictionRequest):
        if model is None:
            raise HTTPException(status_code=503, detail="Model not loaded")

        # The model is assumed to accept raw text and return a dict
        # with "label" and "score" keys.
        with torch.no_grad():
            output = model(request.text)

        return PredictionResponse(
            prediction=output["label"],
            confidence=output["score"],
        )

    @app.get("/health")
    async def health():
        return {"status": "healthy", "model_loaded": model is not None}

TorchServe

    # Create the model archive in the directory TorchServe will read from
    mkdir -p model_store
    torch-model-archiver \
        --model-name mymodel \
        --version 1.0 \
        --model-file model.py \
        --serialized-file model.pt \
        --handler handler.py \
        --export-path model_store

    # Start the server
    torchserve --start \
        --model-store model_store \
        --models mymodel=mymodel.mar

Triton Inference Server

    # config.pbtxt
    name: "text_classifier"
    platform: "pytorch_libtorch"
    max_batch_size: 32
    # dims exclude the batch dimension; Triton prepends it when max_batch_size > 0
    input [
      {
        name: "INPUT__0"
        data_type: TYPE_INT64
        dims: [ -1 ]
      }
    ]
    output [
      {
        name: "OUTPUT__0"
        data_type: TYPE_FP32
        dims: [ -1, 2 ]
      }
    ]
    instance_group [
      { count: 2, kind: KIND_GPU }
    ]

Kubernetes Deployment

Basic Deployment

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: model-server
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: model-server
      template:
        metadata:
          labels:
            app: model-server
        spec:
          containers:
            - name: model-server
              image: myregistry/model-server:v1.0
              ports:
                - containerPort: 8000
              resources:
                requests:
                  memory: "2Gi"
                  cpu: "1"
                limits:
                  memory: "4Gi"
                  cpu: "2"
              livenessProbe:
                httpGet:
                  path: /health
                  port: 8000
                initialDelaySeconds: 30
                periodSeconds: 10
              # The server must expose /ready in addition to /health
              readinessProbe:
                httpGet:
                  path: /ready
                  port: 8000
                initialDelaySeconds: 5
                periodSeconds: 5
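The readiness probe in the manifest above targets /ready, while the FastAPI example earlier only defines /health. The sketch below shows one way the two probes could differ. It is framework-free so it can run anywhere, and `ModelState` and `handle_probe` are hypothetical names rather than part of any library. Kubernetes treats an HTTP probe as successful for any status from 200 up to but not including 400, so returning 503 while the model loads keeps the pod out of the Service without restarting it.

```python
import json
from typing import Tuple


class ModelState:
    """Tracks whether the model has finished loading."""

    def __init__(self) -> None:
        self.loaded = False


def handle_probe(path: str, state: ModelState) -> Tuple[int, str]:
    """Return (HTTP status, JSON body) for a probe path."""
    if path == "/health":
        # Liveness: the process is up and able to answer.
        return 200, json.dumps({"status": "healthy"})
    if path == "/ready":
        # Readiness: accept traffic only once the model is in memory.
        if state.loaded:
            return 200, json.dumps({"status": "ready"})
        return 503, json.dumps({"status": "loading"})
    return 404, json.dumps({"error": "not found"})


state = ModelState()
before_load = handle_probe("/ready", state)
state.loaded = True  # set by the startup hook after the model is loaded
after_load = handle_probe("/ready", state)
```

In the FastAPI server the same logic would live in a second route next to `/health`.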

GPU Deployment

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: gpu-model-server
    spec:
      replicas: 2
      # apps/v1 requires a selector that matches the pod template labels
      selector:
        matchLabels:
          app: gpu-model-server
      template:
        metadata:
          labels:
            app: gpu-model-server
        spec:
          containers:
            - name: model
              image: myregistry/gpu-model:v1.0
              resources:
                limits:
                  nvidia.com/gpu: 1
          # Node label is cluster-specific; adjust to your GPU node pool
          nodeSelector:
            accelerator: nvidia-tesla-t4
          tolerations:
            - key: "nvidia.com/gpu"
              operator: "Exists"
              effect: "NoSchedule"

Horizontal Pod Autoscaler

    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    metadata:
      name: model-server-hpa
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: model-server
      minReplicas: 2
      maxReplicas: 10
      metrics:
        - type: Resource
          resource:
            name: cpu
            target:
              type: Utilization
              averageUtilization: 70
        # Pods metrics need a custom metrics adapter (for example, one backed by Prometheus)
        - type: Pods
          pods:
            metric:
              name: requests_per_second
            target:
              type: AverageValue
              averageValue: "100"
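To make the autoscaler's behavior concrete, here is a simplified sketch of its core rule as described in the Kubernetes documentation: desired replicas = ceil(current replicas × current metric / target metric), with no change when the ratio is within a tolerance of 1.0 (0.1 by default), and with the largest proposal winning when several metrics are configured. The function names are our own, and the sketch ignores stabilization windows, unready pods, and missing metrics.

```python
import math
from typing import List, Tuple


def desired_replicas(
    current_replicas: int,
    current_value: float,
    target_value: float,
    min_replicas: int,
    max_replicas: int,
    tolerance: float = 0.1,
) -> int:
    """Replica count proposed for a single metric, clamped to the HPA bounds."""
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: do not scale
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


def recommend(
    current_replicas: int,
    metrics: List[Tuple[float, float]],
    min_replicas: int,
    max_replicas: int,
) -> int:
    """With several (current, target) metrics, take the largest proposal."""
    return max(
        desired_replicas(current_replicas, current, target, min_replicas, max_replicas)
        for current, target in metrics
    )


# 3 replicas at 90% CPU against a 70% target, and 250 RPS per pod against 100.
cpu_only = desired_replicas(3, 90, 70, min_replicas=2, max_replicas=10)
both_metrics = recommend(3, [(90, 70), (250, 100)], min_replicas=2, max_replicas=10)
```

With the manifest's settings, the CPU metric alone would ask for 4 replicas, while the request-rate metric would push the Deployment to 8.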

Service & Ingress

    apiVersion: v1
    kind: Service
    metadata:
      name: model-service
    spec:
      selector:
        app: model-server
      ports:
        - port: 80
          targetPort: 8000
      type: ClusterIP
    ---
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: model-ingress
      annotations:
        # ingress-nginx rate limit: requests per second per client IP
        nginx.ingress.kubernetes.io/limit-rps: "100"
    spec:
      rules:
        - host: model.example.com
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: model-service
                    port:
                      number: 80

MLOps Pipeline

CI/CD Pipeline

    # .github/workflows/mlops.yml
    name: MLOps Pipeline

    on:
      push:
        branches: [main]

    jobs:
      test:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          - name: Install dependencies
            run: pip install -r requirements.txt pytest
          - name: Run tests
            run: pytest tests/

      train:
        needs: test
        runs-on: ubuntu-latest
        steps:
          # Each job starts on a fresh runner, so check out the code again
          - uses: actions/checkout@v4
          - name: Install dependencies
            run: pip install -r requirements.txt
          - name: Train model
            run: python train.py
          - name: Evaluate model
            run: python evaluate.py
          - name: Register model
            if: success()
            run: python register_model.py

      deploy:
        needs: train
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          # Registry login and cluster credentials are omitted here
          - name: Build image
            run: docker build -t myregistry/model:${{ github.sha }} .
          - name: Push to registry
            run: docker push myregistry/model:${{ github.sha }}
          # Deployment and container names follow the model-server manifest
          - name: Deploy to K8s
            run: kubectl set image deployment/model-server model-server=myregistry/model:${{ github.sha }}
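The "Register model" step runs only if "Evaluate model" succeeds, which in GitHub Actions means the script exited with code 0. The pipeline does not show `evaluate.py`, so the following is only a sketch of how such a quality gate might look. The thresholds and the names `failed_checks` and `gate_exit_code` are hypothetical, and the metric values are placeholders for numbers computed on a held-out test set.

```python
import sys
from typing import Dict, List

# Hypothetical minimum scores a candidate model must reach.
THRESHOLDS: Dict[str, float] = {"accuracy": 0.90, "f1": 0.85}


def failed_checks(metrics: Dict[str, float], thresholds: Dict[str, float]) -> List[str]:
    """Describe every metric that is missing or below its minimum."""
    failures = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None or value < minimum:
            failures.append(f"{name}={value} (minimum {minimum})")
    return failures


def gate_exit_code(metrics: Dict[str, float]) -> int:
    """Return 0 when the model passes every check, 1 otherwise."""
    failures = failed_checks(metrics, THRESHOLDS)
    for failure in failures:
        print(f"FAILED: {failure}", file=sys.stderr)
    return 1 if failures else 0


# Placeholder metrics; a real script would compute these from test data.
exit_code = gate_exit_code({"accuracy": 0.95, "f1": 0.93})
```

A real script would end with `sys.exit(exit_code)` so that a failing model stops the job before registration and deployment.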

Model Registry

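    import mlflow
    import mlflow.pytorch

    # Log and register the model; `model` is an already trained PyTorch module
    with mlflow.start_run():
        mlflow.log_params({"learning_rate": 0.001, "epochs": 10})
        mlflow.log_metrics({"accuracy": 0.95, "f1": 0.93})
        mlflow.pytorch.log_model(
            model, "model", registered_model_name="text-classifier"
        )

    # Load the version in the Production stage; a newly registered version
    # must be transitioned to that stage before this URI resolves
    model_uri = "models:/text-classifier/Production"
    model = mlflow.pytorch.load_model(model_uri)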

Canary Deployment

    apiVersion: networking.istio.io/v1alpha3
    kind: VirtualService
    metadata:
      name: model-service
    spec:
      hosts:
        - model-service
      http:
        - route:
            - destination:
                host: model-service-v1
              weight: 90
            - destination:
                host: model-service-v2
              weight: 10
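The 90/10 split is applied per request, so the canary receives roughly, not exactly, a tenth of the traffic. The simulation below illustrates that with weighted random choice. It is a toy model of the routing rule, not how Istio is implemented, and `pick_destination` is a hypothetical helper.

```python
import random
from collections import Counter
from typing import Dict

# Weights copied from the VirtualService above.
ROUTES: Dict[str, int] = {"model-service-v1": 90, "model-service-v2": 10}


def pick_destination(rng: random.Random, routes: Dict[str, int]) -> str:
    """Choose a destination host with probability proportional to its weight."""
    hosts = list(routes)
    weights = [routes[host] for host in hosts]
    return rng.choices(hosts, weights=weights, k=1)[0]


rng = random.Random(42)  # fixed seed so the simulation is repeatable
total_requests = 10_000
counts = Counter(pick_destination(rng, ROUTES) for _ in range(total_requests))
v2_share = counts["model-service-v2"] / total_requests
```

If the canary's error rate and latency stay healthy, the weights can be shifted step by step until v2 receives all traffic.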

Monitoring

Prometheus Metrics

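    from prometheus_client import Counter, Histogram, start_http_server

    PREDICTIONS = Counter('predictions_total', 'Total predictions', ['model', 'status'])
    LATENCY = Histogram('prediction_latency_seconds', 'Prediction latency')

    # Expose /metrics for Prometheus to scrape (port 9100 is an example)
    start_http_server(9100)

    @LATENCY.time()
    def predict(input_data):
        try:
            result = model(input_data)
        except Exception:
            # Count failures too, so an error rate can be derived
            PREDICTIONS.labels(model='v1', status='error').inc()
            raise
        PREDICTIONS.labels(model='v1', status='success').inc()
        return result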

Grafana Dashboard

Key metrics to monitor:

- Request rate (RPS)

- Latency (p50, p95, p99)

- Error rate

- GPU utilization

- Memory usage

- Model drift indicators
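For readers unfamiliar with the latency and error-rate metrics in this list, the sketch below computes them from raw samples. In practice Prometheus and Grafana derive these from histogram buckets and counters. The nearest-rank percentile used here is one simple definition chosen for illustration, and the sample data is synthetic.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of
    all samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def error_rate(statuses: Sequence[int]) -> float:
    """Fraction of responses with a 5xx status code."""
    if not statuses:
        return 0.0
    return sum(1 for status in statuses if status >= 500) / len(statuses)


latencies_ms = [float(value) for value in range(1, 101)]  # synthetic: 1..100 ms
summary = {pct: percentile(latencies_ms, pct) for pct in (50, 95, 99)}
errors = error_rate([200, 200, 500, 503])
```

Tail percentiles such as p95 and p99 matter more than the average for a model server, because a few slow requests are invisible in the mean.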

Conclusion

With modern MLOps practices, AI model deployment can be made reliable and scalable. Docker, Kubernetes, and CI/CD pipelines are the core building blocks of that process.

At Veni AI, we provide enterprise AI deployment solutions. Get in touch with us about your projects.